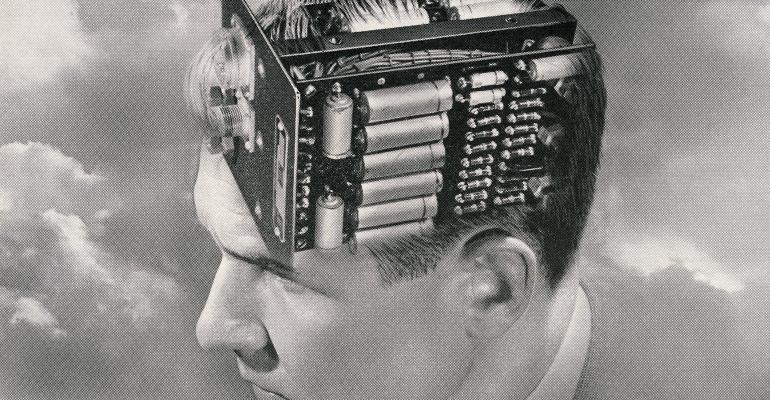

Let’s get real for a moment about the state of artificial intelligence in financial services and especially advisor technology.

A crescendo of hype continues to accompany AI wherever it goes. It is an exciting time; a new bubble is forming or has formed around software and application development in the field. And every big provider to the advisor market seems to be working on an AI offering.

So much so, in fact, that it can be time-consuming and tricky to distinguish real-world breakthrough products that would impress an actual developer or computer scientist from something a smart, savvy marketing professional has cooked up.

What cannot be disputed is that there has been renewed investment in hardware to support the needs of advancing AI technology, something I think most advisors are probably unaware of. As I researched this topic, I came across something that reminded me I’ve lived through a similar period before. And that period’s consequences are such that it changed all of our lives to a significant extent—one that all of us are probably taking for granted.

I’m talking about the period in the early 2000s that resulted in modern Global Positioning System (GPS) technology and the wireless networking technology that we today call “WiFi.” In 1989, during a college semester serving in Army ROTC, I got to see a then state-of-the-art GPS unit. The receiver was fitted onto an external frame backpack and took up the whole thing; it was heavy.

Digital analogs

Fast forward to 2004 and I’m running a small editorial and testing team at PC Magazine and taking regular meetings with a senior executive at a GPS chipset company called SiRF. Every few months he brought by the latest chipset design, which was smaller, had more sensitive receivers and lower power consumption. At that point we were seeing them mostly used in dedicated outdoor handhelds or dedicated in-car navigation devices.

Within another couple of years they were becoming increasingly common in mobile phones—today they are ubiquitous. Most of us cannot imagine navigating to a new restaurant or getting around an unfamiliar city without this technology. Instead of a backpack, we are holding them in the palm of our hand or they are tucked unseen but for the touch screen on the dashboard of our car.

The same thing occurred less dramatically with what we today refer to casually and simply as WiFi. Chances are that you are reading this on a laptop connected wirelessly to the Internet (or maybe a tablet or even a smartphone). That WiFi name came into existence only when the Wi-Fi Alliance trade association formed in 1999. Up until the middle of the following decade, we were testing a plethora of wireless networking products conforming to standards like 802.11b that hardly rolled off the tongue or looked good in marketing materials.

For a cover story on wireless networking in May 2002, my team at the magazine rounded up and tested 20 different products that included an access point (still the thing your device connects with at home or an office that in turn connects you to the Internet) and a PC card, a bit bigger than a credit card, that you bought separately and inserted into a slot on your laptop. Today that same functionality is sealed up in a much smaller chipset inside your laptop that you are probably unaware of unless you delve deeply into your laptop’s specs or user manual. Your laptop today does a lot more, does it faster, runs much longer and probably costs several hundred dollars less than one a decade ago.

Back to the future: AI

Several specific technologies tend to get lumped in together under the artificial intelligence umbrella right now, and those are the ones with the most relevant practical applications currently. I say currently because there are many others that remain in a more theoretical state being worked on in universities around the world.

Among those practical technologies or concepts that advisors have heard about are machine learning, natural language processing and, more frequently of late, deep learning. The raw material each of these technologies consumes is data, mountains of it. In fact, it is this sea of data that gives any of this new ‘intelligence’ its value. To learn, to analyze, to perform predictive analytics and to slice and dice all this data in innumerable ways at the same time takes not just software, in the form of massive algorithms and programs, but also, behind it all, computing power.

During the early years of this new AI revolution, hardware did not come up much in the popular or trade press. We largely have the gaming industry to thank for that. As it turns out, graphics processing units, or GPUs, were and are quite capable of handling the early product iterations of machine learning and natural language processing. In other words, think of all the big data centers full of blade servers with GPUs on them chewing through all our Alexa and Siri requests, or others doing predictive analytics on how this ETF or that one will perform based on hypothetical changes in the market or world events.

It is here where I came across Jerry Yang writing about AI chipsets. Today he is a general partner at Hardware Club, a venture capital firm that specializes in, as you might guess, hardware. Yang, as it turns out, had worked for wireless networking tech company Atheros back in 2011 when global giant Qualcomm acquired it for $3 billion in cash (Qualcomm also acquired SiRF, the GPS chipset company, in 2015).

In a nutshell, Yang points out that as researchers have pushed the limits of GPUs from Nvidia and AMD, the two leading suppliers of these chipsets, and as those companies have continued to raise prices on their chips, the combination has fostered a crop of startups. These startups have designed or are designing chipsets from scratch that are tailored to specific characteristics of AI technologies that current generic GPUs do not address. Some of them are also less power hungry or do not build up heat to the extent that buildings full of GPUs can, which could save on operational costs.

Some of the well-financed startups to keep track of are Cerebras, Graphcore and SambaNova, but there are quite a few others, including several that are being built and financed in China. It is too soon to tell whether any of them will become household names like Intel. More likely is that Intel, Nvidia or AMD, or else Amazon, Apple or Google, will simply acquire them (each of the last three is building its own AI chipsets too).

What we so casually refer to today as AI and look upon with a bit of reverence (and pay a premium for) will tomorrow look primitive and probably unrecognizable.